A little over a year ago, a researcher working on Google’s “AI”-powered chatbot claimed that the language model was sentient. This misunderstanding showed a clear need for public conversations about sentience and technology, a need made clearer still by headlines in the months that followed:

“The New AI-Powered Bing is Threatening Users. That’s No Laughing Matter.” (Tiku)

“ChatGPT in Microsoft Bing goes off the rails, spews depressive nonsense.” (Adorno)

“Microsoft’s AI chatbot tells writer to leave his wife.” (Wace)

The fervor has since calmed, but that hasn’t removed our need to come to terms with how we understand our relationship with computer “intelligence.” This context makes it appropriate to stake a claim:

Lawrence Durrell’s under-studied two-book sequence The Revolt of Aphrodite (1968–1970) is currently his most relevant work.

As books about determinism and free will, they are ultimately about what it means to be human. They also question two sides of human intelligence: understanding and creation. By depicting both computer understanding and computer creation, they invite us to consider what each of these capacities means, a question that is increasingly important.

The first book of the series, Tunc (1968), explores models of computer understanding through “Abel,” a computer system offering “the illusion of a proximate intuition,” built by the narrator, Felix Charlock. As Charlock explains, the system’s primary role is to uncover truth from hints in the world. Since “Abel cannot lie,” it offers the possibility of having “All delusional systems resolved” (Durrell, Tunc ch. 1). Still, Charlock cannot help wondering, “Where is the soul of the machine?” (Durrell, Tunc ch. 6).

For all its impressive abilities, Abel is limited to digital understanding. What we’ve seen lately, by contrast, is an explosion in digital creation. Although relatively new in its domination of our news cycles, computer-generated art, or computer creation, has been around for a while. Margaret Boden points to the creativity of many generative systems, including that of the artist Harold Cohen, who began working on his computer system in 1972 (7–9). Cohen’s system AARON planned its own images and drew them, eventually coloring them, too. In this way, AARON was more capable than Abel, whose only job was to interpret.

Published a couple of years before Cohen began working on AARON, Nunquam (1970), the second part of Durrell’s series, makes a similar move from computer understanding to computer creation. Going beyond Abel to the ambulant robot “Iolanthe,” the novel presents a system that can also respond to, and in turn prompt, new scenarios in the world around it. Iolanthe is “strange and original, a mnemonic monster” (Durrell, Nunquam ch. 2). While Abel can understand, Iolanthe can also create. Again, the text questions the computer system’s humanity: “How free was the final Iolanthe to be? Freer than a chimp, one supposes…” (Durrell, Nunquam ch. 4).

Practitioners of digital humanities (DH) have tended to side with Abel, staying on the understanding side of the divide. This hasn’t meant sacrificing soul to machine: there’s always still a human at the keyboard, devising a question, applying it to material, and making sense of the results. Using DH tools, we might, for instance, take a cue from Charlock’s method of pouring data into a model to see what it can do. The image below represents just a minuscule portion of a word embedding model derived from more than 2.3 million words written in a selection of Durrell’s works: 18 books of fiction, 6 travel books, 3 books of essays and letters, and 1 book of poetry.1 The computer has no understanding of the meanings of these words, but by comparing the contexts in which words appear, it can calculate their semantic similarity and place them in neighborhoods of meaning. The words “eye,” “face,” and “hands” are gathered in the first cluster because the model finds them similar to one another, just as it groups “day” and “night” in the second, “house” and “room” in the third, and “herself,” “himself,” and “myself” in the fourth.
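To make the idea of “neighborhoods of meaning” a little more concrete, here is a minimal Python sketch of one common way similarity between word vectors is measured: cosine similarity, followed by a ranking of nearest neighbors. It is not the R workflow described in the footnote, and the three-dimensional vectors are invented purely for illustration (a trained word2vec model typically learns vectors of 100 or more dimensions from word co-occurrence). The names `toy_vectors`, `cosine_similarity`, and `nearest` are hypothetical.

```python
import math

# Hypothetical 3-dimensional vectors, invented for illustration only.
# A real word2vec model learns much longer vectors from the contexts
# in which each word appears across a corpus.
toy_vectors = {
    "eye":   [0.9, 0.1, 0.0],
    "face":  [0.8, 0.2, 0.1],
    "hands": [0.85, 0.05, 0.15],
    "day":   [0.1, 0.9, 0.0],
    "night": [0.15, 0.85, 0.05],
    "house": [0.0, 0.1, 0.95],
    "room":  [0.05, 0.15, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word, vectors, n=2):
    """Return the n other words whose vectors point most nearly the same way."""
    target = vectors[word]
    scores = [
        (other, cosine_similarity(target, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scores[:n]]


print(sorted(nearest("eye", toy_vectors)))  # prints ['face', 'hands']
```

Cosine similarity compares the direction of two vectors rather than their length, which is why it is a usual choice for grouping words into clusters like those in the figure.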

Three-dimensional reduction showing 300 words of a word-embedding model trained on Durrell’s writing. Regions are colored to clarify proximity.

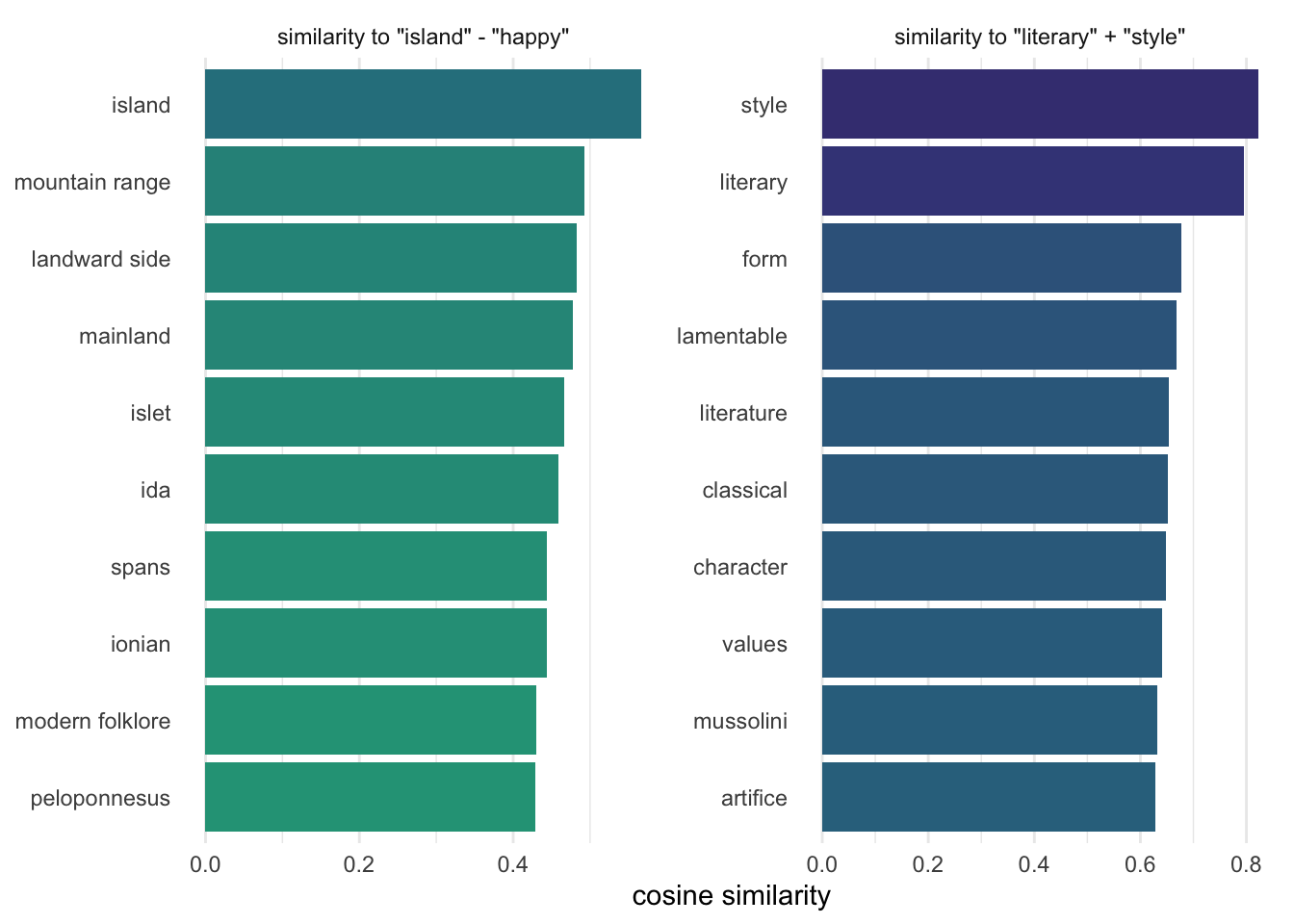

We can go further, using this model to ask simple questions that delve into the worlds created within Durrell’s network of words, as shown in the next two charts. The first uses the model to find words in his writing nearest in concept to an island without happiness (“island” minus “happy”). Results suggest that Durrell’s islands are happier when they shift their focus to the sea, since mountain ranges and the mainland are where happiness isn’t. The second uses the model to find words associated with discussions of literary style (“literary” plus “style”). Here, “form” and “classical” are unremarkable inclusions. But among the top ten is the word “artifice,” perhaps suggesting a façade. Does the model suggest that a particular literary style may in fact be artificial or played up?
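Queries like “island” minus “happy” rest on vector arithmetic: add or subtract word vectors, then rank the rest of the vocabulary by cosine similarity to the result. The sketch below is written in Python rather than with the R package named in the footnote, and it assumes invented four-dimensional vectors chosen only to show the mechanics; its output says nothing about Durrell’s actual model. The helper names `combine` and `nearest_to_combination` are hypothetical.

```python
import math

# Invented 4-dimensional vectors (hypothetical axes loosely meant as
# sea, land, joy, writing). They are NOT taken from the Durrell model;
# the printed results follow only from these made-up numbers.
toy_vectors = {
    "island":    [0.7, 0.5, 0.5, 0.0],
    "happy":     [0.2, 0.0, 0.9, 0.0],
    "sea":       [0.9, 0.0, 0.4, 0.0],
    "mainland":  [0.3, 0.9, -0.2, 0.0],
    "mountains": [0.1, 0.9, -0.3, 0.0],
    "literary":  [0.0, 0.0, 0.1, 0.9],
    "style":     [0.0, 0.0, 0.2, 0.8],
    "form":      [0.0, 0.1, 0.0, 0.9],
    "artifice":  [0.0, 0.0, -0.1, 0.8],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def combine(vectors, plus=(), minus=()):
    """Add the vectors of words in `plus` and subtract those in `minus`."""
    dims = len(next(iter(vectors.values())))
    result = [0.0] * dims
    for word in plus:
        result = [r + v for r, v in zip(result, vectors[word])]
    for word in minus:
        result = [r - v for r, v in zip(result, vectors[word])]
    return result


def nearest_to_combination(vectors, plus=(), minus=(), n=2):
    """Rank words by similarity to the combined query vector,
    skipping the words used to build the query itself."""
    query = combine(vectors, plus, minus)
    used = set(plus) | set(minus)
    ranked = sorted(
        (w for w in vectors if w not in used),
        key=lambda w: cosine_similarity(query, vectors[w]),
        reverse=True,
    )
    return ranked[:n]


print(nearest_to_combination(toy_vectors, plus=["island"], minus=["happy"]))  # ['mainland', 'mountains']
print(nearest_to_combination(toy_vectors, plus=["literary", "style"]))        # ['form', 'artifice']
```

Leaving the query words out of the ranking is a common convention, since a word’s own vector would otherwise tend to dominate its own query.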

Digital humanities techniques like word embedding models use a computer to probe the connections among all the words in Durrell’s writing, inferring connections that are implicit rather than stated. But as we can see, not every association will be satisfying. An incomplete model will offer its own kind of “hallucinations,” like those of the robot Iolanthe before she was fully operational: “For such low-voltage feeding it was remarkable to find her ‘live’ at all. […] But of course with a current so far below optimum the threads had got jumbled as they do in an ordinary delirium—in high fever for example” (Durrell, Nunquam ch. 4).

By contrast, the kind of computer creation offered by more complex systems opens new doors for exploration and inspires new questions worth asking. In the following example, OpenAI’s ChatGPT 3.0 was given two paragraphs from early in Durrell’s Justine, beginning with “At night when the wind roars” and ending with the line “It is the city which should be judged though we, its children, must pay the price” (Durrell, Justine pt. I). Asked to continue the text, the model appropriated Durrell’s themes and style, effectively role-playing a metafictional narrative to say that “the manuscript broke off abruptly” before sharing the start of a made-up “fragmentary letter from Justine, written from Corfu.” The letter begins like this:

Dear Pursewarden,

I have read your manuscript at last. I see that you understand Alexandrian life at last and that it is because you have understood it that you have been able to write so clearly. I am glad that you have come to a decision. I have been waiting for your letter for a long time. I know for certain now, for my own peace of mind, that you will never write again. I know that you will never return to Alexandria. It is all too painful. I can’t bear to see my life and Nessim’s so clearly grasped and understood by you.

But I am glad that you have understood it. Standing at the window now I can see the sea lying like a ventral plane of glass, rippled and blue, between two cliffs and I think of the city and of Melissa and of you all — of how you were all so dearly loved. (“‘At Night When the Wind Roars…’ [Prompt]”)

The model is good at evoking Durrell’s style. Even the proper nouns are unsettling: Pursewarden and Corfu, though unmentioned in the prompt, belong to Durrell’s world, and the computer has supplied them. But how has it done so? Does the model know more than it’s letting on? In Nunquam, we see a similar moment of discomfort and explanation when the robot knows more than expected: “We had even forgotten (how is this possible: please tell me?), we had forgotten that she would know all about us, even our names” (Durrell, Nunquam ch. 3). Not unlike Iolanthe, ChatGPT has probably been trained on The Alexandria Quartet thoroughly enough to recognize the prompt, to reference characters from those books who go unmentioned in it, and even to extrapolate further by adding locations in the orbit of Durrell’s oeuvre.

More surprising may be the adjective “ventral” in the second paragraph, used to describe the sea as a plane of glass. The word is almost unused in Durrell’s writing, appearing nowhere in an evocative sense among the 28 books in my digital collection, yet it feels so right. How?

Using large language models like ChatGPT in this way seems useful for exploring the borders of our own expectations. Cohen called his AARON program not “an expert system” but “an expert’s system” — a “research tool for the expansion of my own expert knowledge” of art (847).

We might conceive of using these new AI tools in a similar way.

Generative models offer opportunities to expand our understanding, serving us as readers because they can generate possibilities beyond what existing methods of digital analysis reveal. Nevertheless, the moment leaves us with many questions.

How might studying methods of digital creativity further our understanding of non-digital works of art? What does our reaction to the computer’s mimicry of Durrell’s style reveal about that target style and our understanding of it? And how does it engage with or challenge our notions of authenticity?

Cohen has also described style as “the signature of a complex system” (855). Is the output of this kind of generative system a forgery, then, or something else? When a system gives us something that isn’t Durrell yet is still recognizably in Durrell’s style, where does that leave our understanding of signature?

These questions remain unanswered, but they further support reading The Revolt of Aphrodite, the story of a robot struggling to understand its own artifice, as Durrell’s most relevant work for engaging with the present moment. Iolanthe’s revolt nearly succeeds, but her robotic failure shows what it means to be human. In the same way, generative AI’s successes and failures offer new angles from which to study creativity.

Déjà Vu

In modified forms, this post was presented in February 2023, at the Louisville Conference on Literature and Culture since 1900, and shared in the newsletter of the International Lawrence Durrell Society, The Herald.

References

Footnotes

The model and subsequent visualizations were made in R using Hadley Wickham et al.’s “tidyverse” suite of packages and Ben Schmidt’s “wordVectors” package to implement “word2vec,” by Tomas Mikolov et al. The interactive figure was made with Carson Sievert’s “plotly” package.

Citation

@misc{clawson2023,
  author = {Clawson, James},
  title = {Rejections of a {Machine} {Venus}},
  date = {2023-10-16},
  url = {https://jmclawson.net/posts/rejections-of-a-machine-venus/},
  langid = {en}
}